Dominick Reilly

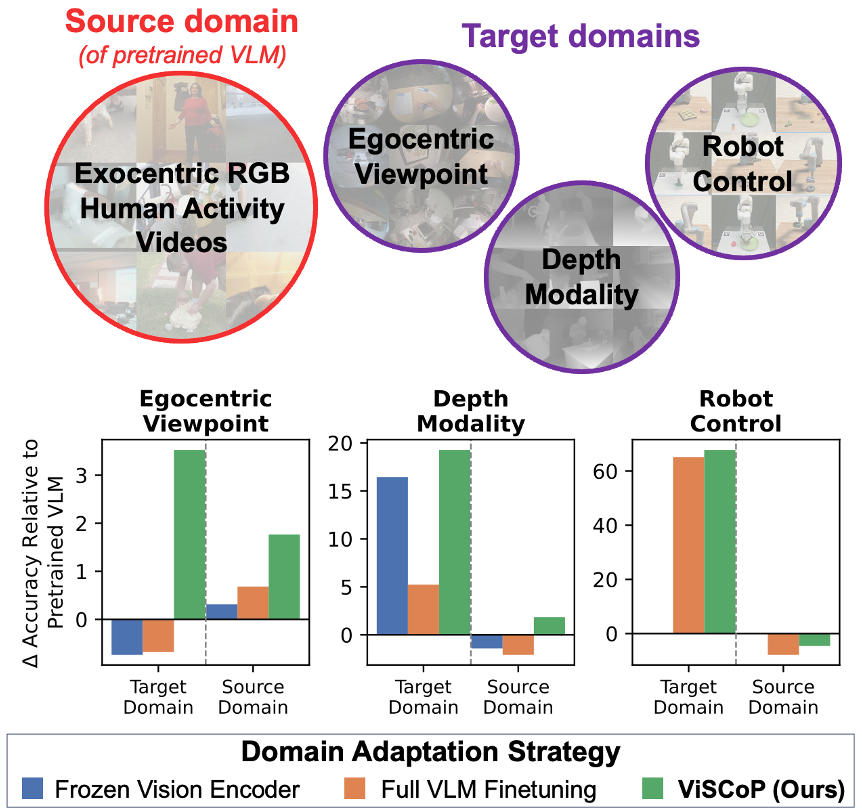

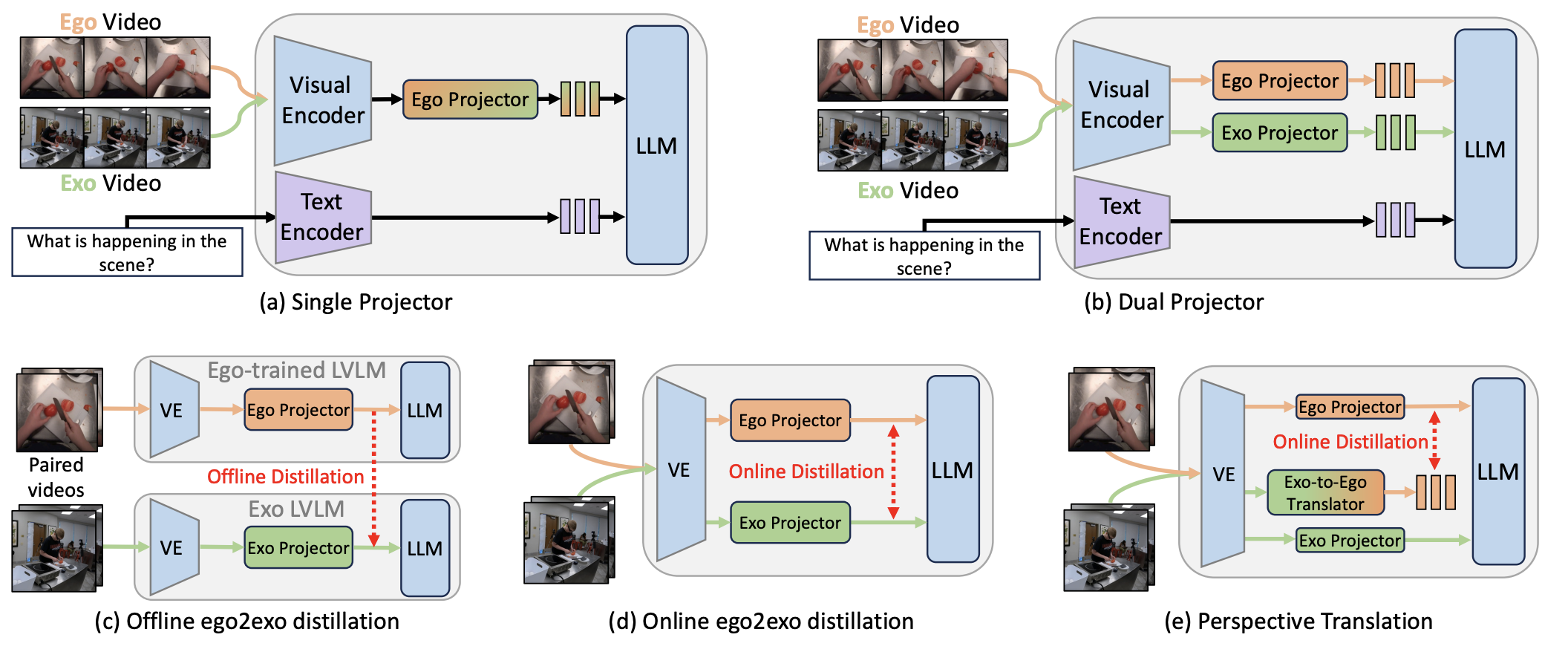

Hello, I am a fourth-year PhD student in Computer Science at the University of North Carolina at Charlotte advised by Dr. Srijan Das, and am a member of the Charlotte Machine Learning Lab (CharMLab). My current focus is on multi-modal learning in Vision Language Models (VLMs) for video understanding and robotic control. I have worked on tasks including ego-exo viewpoint transfer, cross-modal domain adaptation, and fine-grained action understanding. I am interested in developing simple methods that are generalizable and scalable.

News

| Dec 2025 | Started research internship at the Sony Creative AI Lab working with the Multimodal NLP Team! |

|---|---|

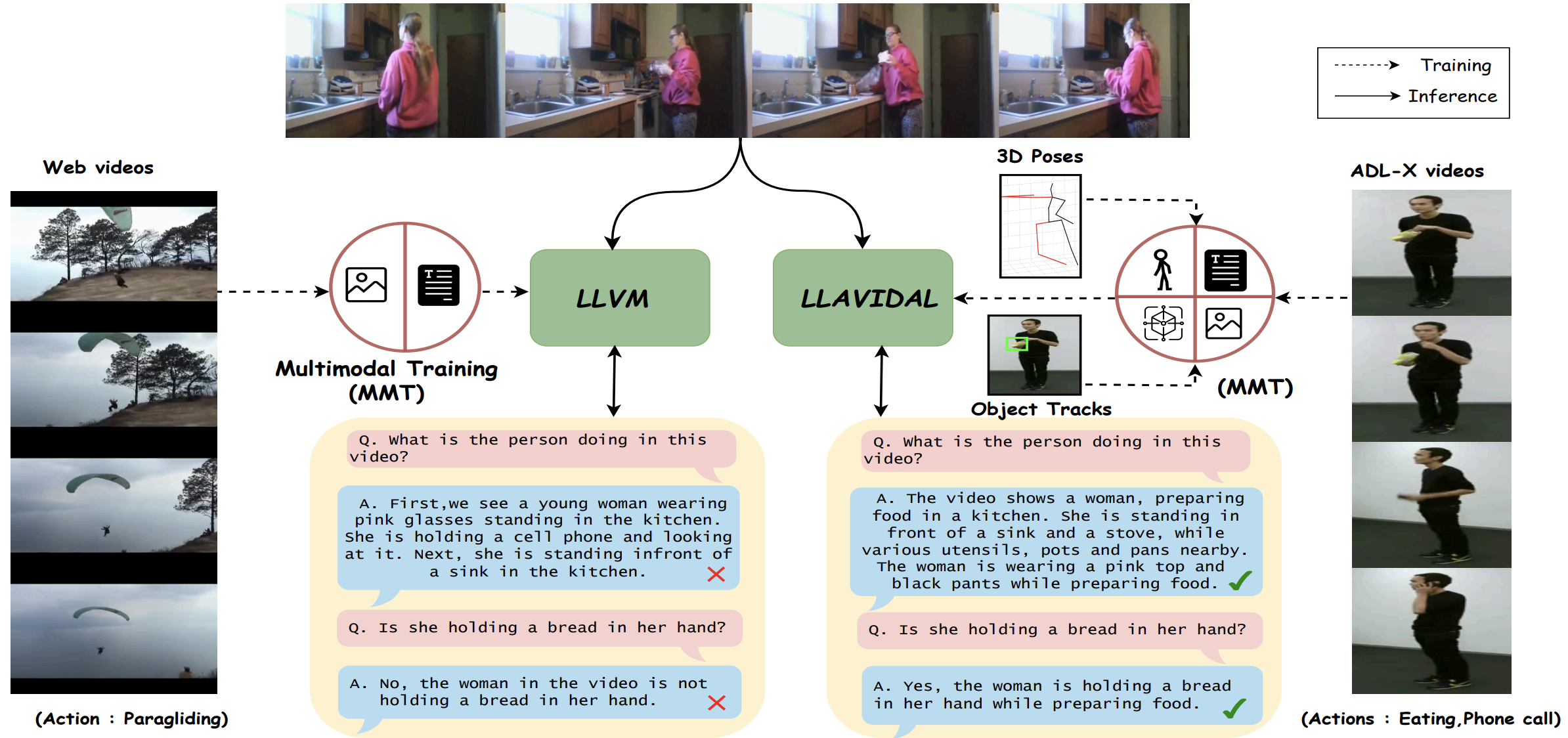

| Feb 2025 | One paper, “LLAVIDAL: A Large LAnguage VIsion Model for Daily Activities of Living”, is accepted to CVPR 2025! |

| Dec 2024 | One paper, “SKI Models: Skeleton Induced Vision-Language Embeddings for Understanding Activities of Daily Living”, is accepted to AAAI 2025! |

| Jun 2024 | Started summer internship at Honda Research Institute in San Jose, California as a student researcher! |

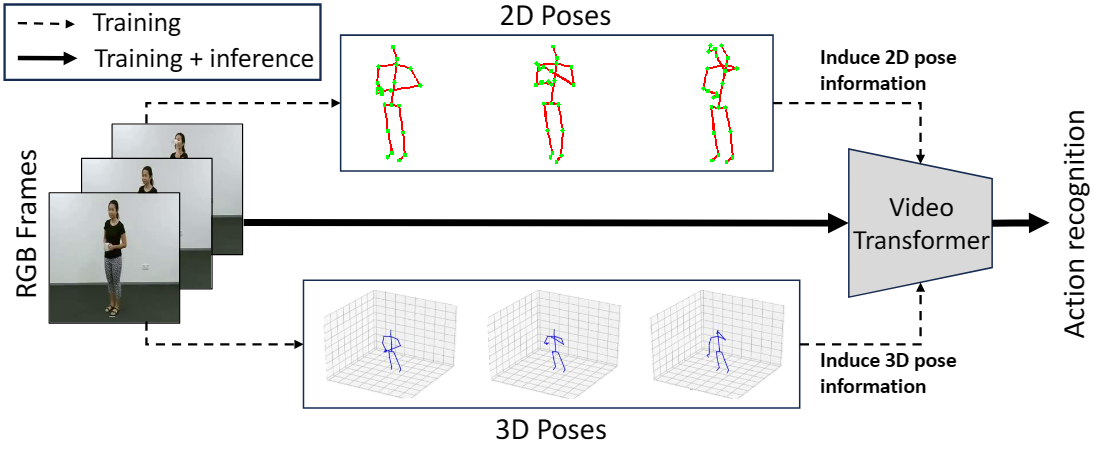

| Feb 2024 | One paper, “Just Add π! Pose Induced Video Transformers for Understanding Activities of Daily Living”, is accepted to CVPR 2024! |